My Home Lab Kubernetes Cluster using Containerd

In this first post of my Kubernetes section, I am simply going to document my current Kubernetes cluster deployment. This is a collection of instructions that I arrived at over many hours of deployment, drawing on content I found and on work with my friends here in the NW tech community. If you are new to Kubernetes, be sure to find others who have started or are looking to learn. Kubernetes is a big world, and my time working with others on these projects has been invaluable.

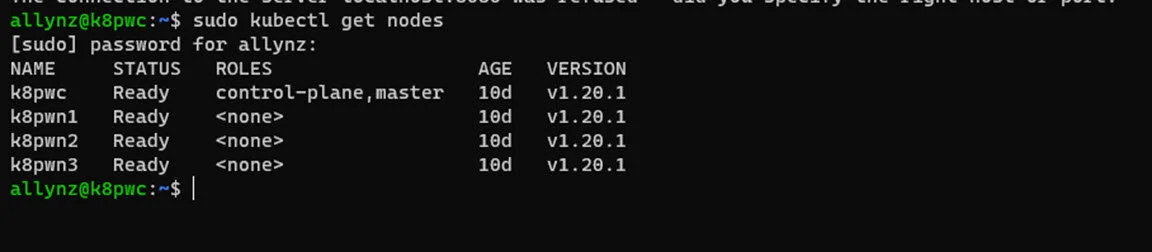

This deployment concentrates on an on-premises install running on KLAND, which is my NUC datacenter. It consists of 4 Ubuntu 20.04 VMs, where the first acts as the controller and the other 3 as the cluster nodes. The controller will not run any workloads. I hope to post an additional write-up sometime in the new year on adding a second controller.

I also decided to forgo Docker on this install. Kubernetes has recently deprecated Docker as a container runtime (the dockershim, as of v1.20). Now, I am not too worried, as Docker will be supported for some time, but as I am fairly new to Kubernetes I decided to learn how to go forward without it. Plus, it is one less thing to go wrong. So, I used containerd instead. Let’s get into it.

Deploying Four Ubuntu 20.04 VMs

I first deployed 4 Ubuntu VMs in vSphere to get started.

I won’t go into the complete setup here, as it was the basic Ubuntu install. Make sure SSH is installed. After these were all deployed, I took a few extra steps on each VM, so go ahead and SSH in to each.

First, install open-vm-tools.

sudo apt update

sudo apt install open-vm-tools -y

Then disable swap.

By default, the kubelet will not start with swap enabled on Linux.

sudo swapoff --all

sudo sed -ri '/\sswap\s/s/^#?/#/' /etc/fstab
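Before editing the real /etc/fstab, it can help to see what that sed expression does on a throwaway file. The sketch below wraps the same expression in a small function and runs it against a temporary sample. The function name comment_out_swap and the sample fstab lines are made up for illustration, and it assumes GNU sed as shipped with Ubuntu.

```shell
# comment_out_swap FILE
# Comments out every fstab-style line that has "swap" as a
# whitespace-delimited field. Running it twice does not add a second "#".
comment_out_swap() {
  sed -ri '/\sswap\s/s/^#?/#/' "$1"
}

# Try it on a temporary sample file instead of the real /etc/fstab.
sample="$(mktemp)"
printf '%s\n' \
  'UUID=1234 / ext4 defaults 0 1' \
  '/swap.img none swap sw 0 0' > "$sample"
comment_out_swap "$sample"
cat "$sample"   # the swap line should now start with "#"
rm -f "$sample"
```

After running the real command, `swapon --show` printing nothing is a quick way to confirm that no swap is active.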

Next, some additional tools and updates

First install some tools and check for updates.

sudo apt update

sudo apt install -y \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg-agent \
    software-properties-common \
    nfs-common \
    open-iscsi \
    multipath-tools

sudo apt upgrade -y

sudo apt autoremove -y

Linux Networking

Next, I set static IP addresses. DHCP does exist in my network, but my previous Kubernetes deployments had trouble when those DHCP IPs didn’t hold. It is up to you, but I find it much less troublesome once these addresses are defined. Note: if you are familiar with Linux networking from the days of old but it has been a while, the process has changed. Ubuntu now uses netplan, which is configured with a YAML file. So, to complete this task, change the following to match your network; the values here are an example from the test lab used in my setup.

sudo vi /etc/netplan/99-netcfg-vmware.yaml

# Generated by VMWare customization engine.
network:
  version: 2
  renderer: networkd
  ethernets:
    ens192:  # Make sure to match this to your Ethernet interface name when you first access the YAML file
      dhcp4: no
      addresses:
        - 10.7.0.201/24
      gateway4: 10.7.0.1
      dhcp6: yes
      dhcp6-overrides:
        use-dns: false
      nameservers:
        search:
          - kland.local
        addresses:
          - 10.7.0.100
          - 8.8.8.8
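Since the same file is needed on all four VMs with only the address changing, one option is to generate it rather than hand-edit it each time. Here is a minimal sketch, assuming the same layout as the file above. The function name render_netplan and its argument order are hypothetical, and the DNS servers and search domain are hard-coded to my lab values.

```shell
# render_netplan IFACE ADDRESS_CIDR GATEWAY
# Prints a netplan file with the same layout as the one above to stdout.
# DNS servers and search domain are the lab values from this post.
render_netplan() {
  local iface="$1" address="$2" gateway="$3"
  cat <<EOF
network:
  version: 2
  renderer: networkd
  ethernets:
    ${iface}:
      dhcp4: no
      addresses:
        - ${address}
      gateway4: ${gateway}
      dhcp6: yes
      dhcp6-overrides:
        use-dns: false
      nameservers:
        search:
          - kland.local
        addresses:
          - 10.7.0.100
          - 8.8.8.8
EOF
}
```

For example, `render_netplan ens192 10.7.0.202/24 10.7.0.1 | sudo tee /etc/netplan/99-netcfg-vmware.yaml` would write the file for a second VM (the .202 address is only an illustration). Running `sudo netplan try` afterwards applies the change and rolls it back if you do not confirm, which is handy over SSH; `sudo netplan apply` applies it directly.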

Now, a Great Time to Create a Backup

At this point I thought it would be a great idea to make a backup, and of course I used my favorite backup tool, Veeam. This helped tremendously when I made mistakes going forward. I suggest you take a look at Veeam; you can even use the Community Edition for free: https://www.veeam.com/virtual-machine-backup-solution-free.html

Installing the containerd runtime

Now we should have 4 updated, ready-to-roll Ubuntu VMs. The next step is to install the container runtime service. As mentioned previously, I will be forgoing Docker for containerd. To install it, I ran the following on each VM.

Update apt with the command:

sudo apt-get update

Then

sudo apt-get upgrade -y

Install containerd with the following

sudo apt-get install containerd -y

sudo mkdir -p /etc/containerd

sudo su -

containerd config default > /etc/containerd/config.toml

exit
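A quick sanity check after this step is to confirm that the config file actually has content, since a mistyped command can leave it missing or empty. A minimal sketch follows. The function name config_present is hypothetical, and it only checks that the file is non-empty, not that its contents are valid TOML.

```shell
# config_present [PATH]
# Succeeds if PATH (default: /etc/containerd/config.toml) exists and is
# non-empty; otherwise prints a message to stderr and returns 1.
config_present() {
  local path="${1:-/etc/containerd/config.toml}"
  if [ -s "$path" ]; then
    echo "ok: $path exists and is non-empty"
  else
    echo "missing or empty: $path" >&2
    return 1
  fi
}
```

Call it with no arguments on each VM. If you changed the file after containerd was already running, `sudo systemctl restart containerd` picks up the new config.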

Deploy Kubernetes with Kubeadm

Install Kubeadm, Kubelet, and Kubectl on All Nodes

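Add the Google Cloud package repository to your sources. (The Kubernetes project has since moved its packages to pkgs.k8s.io and frozen this legacy repository, so on a current install check the official kubeadm install docs for the repository lines.)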

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list
deb https://apt.kubernetes.io/ kubernetes-xenial main
EOF

sudo apt update

Install the components.

sudo apt install -y kubelet kubeadm kubectl

Hold the versions. This will keep us from unintentionally updating the cluster, or getting the versions out of sync between nodes.

sudo apt-mark hold kubelet kubeadm kubectl

Time to fix a few things with containerd

With containerd it is necessary to make some manual changes that Docker would have just handled. But hey, we will have one less thing to go wrong with our cluster when we are finished.

First, add a line to the file /etc/sysctl.conf.

sudo nano /etc/sysctl.conf

At the bottom add:

net.bridge.bridge-nf-call-iptables = 1

Save and close.

Next, issue the commands:

sudo -s

sudo echo '1' > /proc/sys/net/ipv4/ip_forward

exit

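Load the following modules:

sudo modprobe overlay

sudo modprobe br_netfilter

Then reload the configuration. The modules go first because the net.bridge.bridge-nf-call-iptables setting only exists once br_netfilter is loaded:

sudo sysctl --system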
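The modprobe commands and the write to /proc above do not survive a reboot. One common way to make them persistent, following the pattern used in the upstream Kubernetes container runtime docs, is to drop files into /etc/modules-load.d and /etc/sysctl.d. This is a sketch, not what I ran at the time. The function name write_k8s_prereqs, the k8s.conf file names, and the optional root-prefix argument (there only so it can be tried in a scratch directory) are assumptions.

```shell
# write_k8s_prereqs [ROOT]
# Writes module and sysctl drop-in files under ROOT (default: the real /etc).
write_k8s_prereqs() {
  local root="${1:-}"
  mkdir -p "${root}/etc/modules-load.d" "${root}/etc/sysctl.d"

  # Kernel modules to load at boot.
  cat > "${root}/etc/modules-load.d/k8s.conf" <<EOF
overlay
br_netfilter
EOF

  # Kernel parameters applied at boot and by "sysctl --system".
  cat > "${root}/etc/sysctl.d/k8s.conf" <<EOF
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

Try `write_k8s_prereqs /tmp/scratch` first and inspect the two files. To write the real ones, run the function from a root shell with no argument, then run `sudo sysctl --system`.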

Start the Cluster on Controller Node

sudo kubeadm init

When done, you should see something like this:

Your Kubernetes control-plane has initialized successfully!

Run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.231:6443 --token tc38rh.ic3xx9mggovgg46m \
    --discovery-token-ca-cert-hash sha256:4cf9ff444fd92427de3faaaaaa6debdb0ebdb33f9bcd50d04f3a060e76307738

So... do what it says; run the following:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Then choose and deploy a pod network. I chose Calico, which I deployed with the following.

curl https://docs.projectcalico.org/manifests/calico.yaml -O

kubectl apply -f calico.yaml

Add Nodes to Cluster

Copy the "kubeadm join..." command from the output of kubeadm init and run it on each of the three worker nodes. It will look similar to the following, but with your specific information.

sudo kubeadm join (yourIp):6443 --token tc38rh.ic3xx9mggovgg46m \
    --discovery-token-ca-cert-hash sha256:4cf9ff444fd92427de3faaaaaa6debdb0ebdb33f9bcd50d04f3a060e76307738
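One thing to watch: the bootstrap token from kubeadm init expires (after 24 hours by default), so a join command saved from an earlier session may be rejected. On the controller, `kubeadm token create --print-join-command` prints a fresh one. The sketch below is only a format check for a pasted token (6 lowercase alphanumerics, a dot, then 16 more). The function name valid_bootstrap_token is hypothetical, and it says nothing about whether the token is still valid on the cluster.

```shell
# valid_bootstrap_token TOKEN
# Succeeds if TOKEN has the kubeadm bootstrap token shape:
# 6 lowercase alphanumerics, a dot, then 16 lowercase alphanumerics.
valid_bootstrap_token() {
  printf '%s' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}
```

For example, `valid_bootstrap_token tc38rh.ic3xx9mggovgg46m && echo "looks like a token"` catches a truncated copy and paste before kubeadm join does.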

Verify Cluster

Run kubectl get nodes and wait for them to go from NotReady to Ready status.

kubectl get nodes
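Rather than re-running the command by hand, kubectl can block until the nodes report Ready with `kubectl wait --for=condition=Ready nodes --all --timeout=300s`. If you would rather script around the plain node listing, here is a small sketch that counts nodes whose STATUS column does not start with Ready. The function name count_not_ready is hypothetical.

```shell
# count_not_ready
# Reads "kubectl get nodes --no-headers" output on stdin and prints how many
# nodes have a STATUS (second column) that does not start with "Ready".
count_not_ready() {
  awk '$2 !~ /^Ready/ { n++ } END { print n + 0 }'
}
```

Usage would be `kubectl get nodes --no-headers | count_not_ready`, which prints 0 once every node is Ready. Nodes can sit in NotReady for a minute or two while the Calico pods start.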